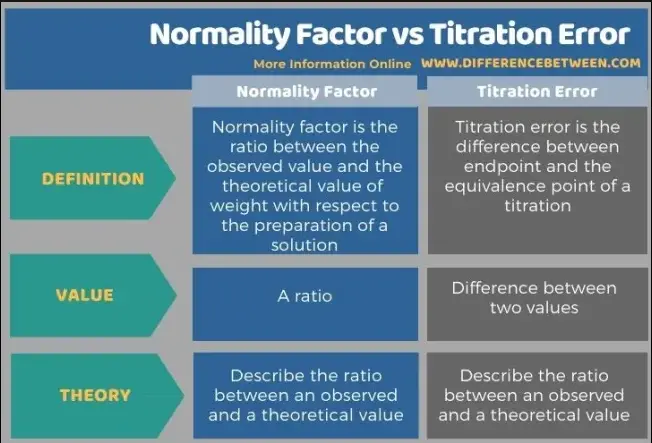

Chemical analysis forms the backbone of scientific inquiry and industrial quality control, where precision and accuracy are paramount. Two concepts in particular, the normality factor and titration error, strongly influence the accuracy of titration-based analyses. They are fundamental yet distinct, and each plays its own role in the quality of analytical results.

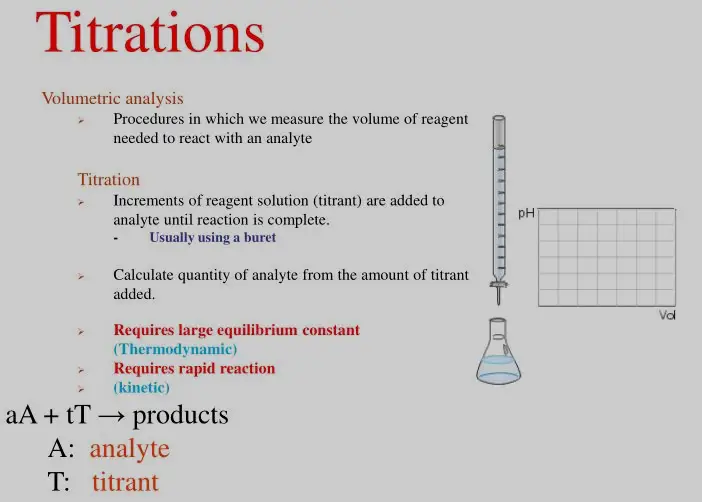

The normality factor is a correction factor that adjusts the nominal concentration of a titrant to the concentration it actually has, as established by standardization. Titration error, on the other hand, refers to discrepancies between the true and measured values in a titration, arising from sources such as human error, equipment inaccuracies, and the chemistry of the system (for example, an indicator that changes color slightly before or after the equivalence point). Understanding these two factors is essential for improving the reliability of chemical titration results.

While both concepts aim to refine the accuracy of chemical titration, their applications and implications differ significantly. Accurate application of the normality factor ensures the correct stoichiometric calculations, while minimizing titration errors is crucial for achieving reliable and reproducible results. This distinction is critical for professionals in fields ranging from pharmaceuticals to environmental science, where even minor inaccuracies can lead to significant consequences.

Normality Factor

Definition and Significance

The normality factor is a key concept in analytical chemistry, particularly in titration. It is tied to normality, which expresses the reactive capacity of a solution and is essential for determining concentrations through titration. Getting this value right ensures that stoichiometric relationships are accurately reflected in the calculations.

In practical terms, normality is the number of equivalents of solute per liter of solution. It matters most when each formula unit supplies more than one proton or hydroxide ion: a 1 M solution of sulfuric acid, which can donate two protons, is 2 N in a complete neutralization. In acid-base titrations, normality therefore tells chemists how many equivalents of acid will react with a given amount of base, which supports accurate formulation in industrial applications such as pharmaceuticals and manufacturing.

Calculation Methods

Calculating the normality of a solution involves several straightforward steps:

- Identify the Equivalent Weight: This depends on the type of reaction (acid-base, oxidation-reduction, etc.).

- Measure the Mass of Solute: Weigh the chemical substance that will dissolve in the solvent.

- Determine the Volume of the Solution: Measure the total volume of the solution where the solute is dissolved.

- Calculate Normality: Use the formula $\text{Normality} = \dfrac{\text{Number of Equivalents}}{\text{Liters of Solution}}$, where the number of equivalents is calculated by dividing the mass of the solute by its equivalent weight.
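
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a laboratory procedure: the function names are hypothetical, and the sulfuric acid figures (molar mass 98.08 g/mol, two equivalents per mole in a complete neutralization) are assumed example values.

```python
def equivalent_weight(molar_mass_g_per_mol: float, equivalents_per_mole: int) -> float:
    """Equivalent weight in g/eq: molar mass divided by equivalents per mole."""
    if equivalents_per_mole <= 0:
        raise ValueError("equivalents_per_mole must be positive")
    return molar_mass_g_per_mol / equivalents_per_mole


def normality(mass_g: float, eq_weight_g_per_eq: float, volume_l: float) -> float:
    """Normality in eq/L: (mass / equivalent weight) / liters of solution."""
    if eq_weight_g_per_eq <= 0 or volume_l <= 0:
        raise ValueError("equivalent weight and volume must be positive")
    equivalents = mass_g / eq_weight_g_per_eq
    return equivalents / volume_l


if __name__ == "__main__":
    # Assumed example: 4.904 g of H2SO4 made up to 1.000 L of solution.
    ew = equivalent_weight(98.08, 2)
    n = normality(4.904, ew, 1.000)
    print(f"{ew:.2f} g/eq, {n:.4f} N")  # prints "49.04 g/eq, 0.1000 N"
```

Keeping the equivalent weight as a separate step makes the reaction-dependent part explicit: the same compound can have a different equivalent weight in a different reaction.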

Factors Affecting Normality

Several factors can alter the normality of a solution:

- Temperature Changes: Temperature affects the volume of the solution, and because normality is expressed per liter of solution, the concentration changes with it.

- Chemical Degradation: Over time, some chemicals degrade, which may alter the concentration of the active agent in the solution.

- Precision in Measurement: Accurate measurements of chemical weight and solution volume are crucial to determining true normality.

Titration Error

Definition and Types

Titration error refers to inaccuracies that occur during the titration process, affecting the final outcome of an analysis. These errors can be classified into two main types:

- Systematic Errors: These are predictable inaccuracies that consistently occur in one direction, either always higher or always lower than the actual value.

- Random Errors: These occur without predictable pattern and can be caused by measurement variability, environmental factors, or human error.

Understanding these errors is essential for improving the precision and reliability of titration outcomes.
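
One way to tell the two error types apart is to run replicate titrations of a sample whose true value is known. The sketch below assumes that setup: the mean offset from the true value estimates the systematic error (bias), and the spread between replicates estimates the random error. The function name and the replicate volumes are hypothetical.

```python
from statistics import mean, stdev


def summarize_replicates(measured: list[float], true_value: float) -> dict[str, float]:
    """Separate replicate results into a bias estimate and a spread estimate."""
    if len(measured) < 2:
        raise ValueError("need at least two replicates to estimate spread")
    avg = mean(measured)
    return {
        "mean": avg,
        "bias": avg - true_value,    # systematic error: consistent offset
        "std_dev": stdev(measured),  # random error: sample standard deviation
    }


if __name__ == "__main__":
    # Assumed example: endpoint volumes in mL for a sample whose
    # expected endpoint volume is 25.00 mL.
    result = summarize_replicates([25.12, 25.10, 25.14, 25.12], true_value=25.00)
    print(f"bias = {result['bias']:+.2f} mL, std dev = {result['std_dev']:.3f} mL")
```

In this made-up data set the replicates agree closely with each other but all sit above the expected value, which is the signature of a systematic error rather than a random one.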

Common Causes

Common sources of titration error include:

- Improper Calibration of Equipment: Instruments like pipettes and burettes must be calibrated regularly to ensure accuracy.

- Observer Error: Human error in reading measurements, especially at the end point of a titration.

- Inconsistent Reagent Quality: Variability in the quality or concentration of titrants can lead to significant errors in the final results.

Impact on Results

The consequences of titration errors are not trivial. They can lead to:

- Misinterpretation of Data: Inaccurate data can lead to incorrect conclusions, affecting research and development.

- Quality Control Issues: In industries like pharmaceuticals, errors in titration can result in products that do not meet required standards.

- Economic Losses: Incorrect chemical formulations can lead to wastage of resources and financial losses.

Comparing Normality and Error

Key Differences

Understanding the difference between normality factor and titration error is essential for any chemist or technician involved in laboratory analysis. The normality factor is a calculated value that adjusts the concentration of a titrant to reflect its exact chemical reactivity. This factor is crucial for ensuring that stoichiometric calculations in titrations are accurate and reflect the true nature of the chemical reaction.

On the other hand, titration error encompasses the range of inaccuracies that may occur during the titration process. These errors affect the measurement directly, leading to results that can deviate from the true value. Systematic errors lead to consistent deviation in one direction, while random errors can vary in magnitude and direction, making them more unpredictable.

The key differences between these two concepts lie in their application and impact:

- Nature: The normality factor is a corrective factor; titration error is a measure of inaccuracy.

- Purpose: The normality factor adjusts calculations; titration errors point to problems with accuracy or precision.

- Control: The normality factor can be determined by standardization and applied directly; errors can only be minimized through careful method and technique.

Practical Examples

To illustrate the differences, consider these examples:

- Acid-Base Titration: When titrating a base with an acid, the normality factor ensures that the acid’s concentration accurately represents its capacity to neutralize the base. If the titration is performed with an improperly calibrated burette, the titration error may lead to an overestimation or underestimation of the base’s concentration.

- Redox Titration: In redox titrations, the normality factor adjusts for the oxidizing or reducing strength of a solution. Errors in this type of titration can arise from incorrect endpoint detection, significantly affecting the outcome of the analysis.
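
To make the role of the normality factor concrete, the sketch below assumes the common convention that the factor is the ratio of the titrant's actual (standardized) normality to its nominal normality, and that the analyte concentration follows from the equivalence relation N1·V1 = N2·V2. The function names and the numbers are hypothetical.

```python
def normality_factor(actual_normality: float, nominal_normality: float) -> float:
    """Ratio of the standardized normality to the nominal (label) normality."""
    if nominal_normality <= 0:
        raise ValueError("nominal_normality must be positive")
    return actual_normality / nominal_normality


def analyte_normality(
    titrant_nominal_n: float,
    factor: float,
    titrant_volume_ml: float,
    analyte_volume_ml: float,
) -> float:
    """Analyte normality from N1*V1 = N2*V2, with the titrant corrected by its factor."""
    if analyte_volume_ml <= 0:
        raise ValueError("analyte_volume_ml must be positive")
    return titrant_nominal_n * factor * titrant_volume_ml / analyte_volume_ml


if __name__ == "__main__":
    # Assumed example: a nominally 0.1 N titrant standardizes at 0.0985 N.
    f = normality_factor(0.0985, 0.1)
    corrected = analyte_normality(0.1, f, titrant_volume_ml=20.00, analyte_volume_ml=25.00)
    uncorrected = analyte_normality(0.1, 1.0, titrant_volume_ml=20.00, analyte_volume_ml=25.00)
    print(f"factor = {f:.3f}")                 # prints "factor = 0.985"
    print(f"corrected = {corrected:.4f} N")    # prints "corrected = 0.0788 N"
    print(f"uncorrected = {uncorrected:.4f} N")  # prints "uncorrected = 0.0800 N"
```

Note that the factor only corrects the titrant concentration. A misread burette would change `titrant_volume_ml` itself, and no factor compensates for that.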

Measuring and Minimizing Errors

Equipment Calibration

Calibration of equipment is one of the most effective ways to reduce titration error. Regular calibration ensures that instruments measure volumes accurately, which is crucial for precise titration results. The steps typically involved in calibration include:

- Checking for Consistency: Regular checks to ensure that devices like pipettes, burettes, and volumetric flasks consistently deliver accurate volumes.

- Adjustment: Adjusting instruments according to manufacturer guidelines if discrepancies are found.

- Documentation: Keeping records of calibration data to track the performance over time and identify when recalibration is needed.
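
A consistency check of this kind is often done gravimetrically: water is dispensed, weighed, and converted to volume. The sketch below assumes that approach. The density value (about 0.9982 g/mL for water near 20 °C), the tolerance, and the function names are assumptions for illustration, and the calculation ignores air-buoyancy corrections that a formal calibration would include.

```python
from statistics import mean

# Assumption: approximate density of pure water near 20 °C, in g/mL.
WATER_DENSITY_G_PER_ML = 0.9982


def delivered_volume_ml(mass_g: float, density_g_per_ml: float = WATER_DENSITY_G_PER_ML) -> float:
    """Convert the mass of dispensed water to a volume."""
    return mass_g / density_g_per_ml


def calibration_report(nominal_ml: float, masses_g: list[float], tolerance_ml: float) -> dict:
    """Compare the mean delivered volume with the nominal volume."""
    if not masses_g:
        raise ValueError("at least one weighing is required")
    volumes = [delivered_volume_ml(m) for m in masses_g]
    error = mean(volumes) - nominal_ml
    return {
        "mean_volume_ml": mean(volumes),
        "error_ml": error,
        "within_tolerance": abs(error) <= tolerance_ml,
    }


if __name__ == "__main__":
    # Assumed example: three weighings from a 10.00 mL pipette,
    # checked against a hypothetical tolerance of 0.02 mL.
    report = calibration_report(10.00, [9.972, 9.975, 9.969], tolerance_ml=0.02)
    print(f"error = {report['error_ml']:+.3f} mL, ok = {report['within_tolerance']}")
```

Storing each report alongside its date gives the documentation trail described above and makes drift visible over time.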

Technique Improvement

Improving technique is equally important to ensure that titrations are performed without introducing additional errors. Key areas to focus on include:

- Proper Mixing: Ensuring that solutions are thoroughly mixed to avoid concentration gradients within the titration flask.

- Endpoint Detection: Using appropriate indicators to accurately determine the titration endpoint. For complexometric titrations, this might include electronic devices that detect color changes more accurately than the human eye.

- Training and Practice: Regular training sessions for laboratory personnel to refresh their skills and introduce new techniques that can improve accuracy.

Frequently Asked Questions

What is Normality Factor?

Normality factor refers to a corrective coefficient used in titrations to account for the exact concentration of the titrant. This factor adjusts the calculated concentration to reflect real-world conditions, ensuring that the stoichiometric calculations in reactions are precise.

How Does Titration Error Occur?

Titration error can occur due to several factors, including incorrect handling of equipment, environmental factors affecting the reactants, and inherent inaccuracies in measurement devices. Understanding these causes helps in identifying and mitigating potential errors in the lab.

Why is Minimizing Titration Error Important?

Minimizing titration error is crucial for achieving accurate and consistent results in chemical analysis. It ensures the reliability of the data, which is vital for research validity, quality control, and safety in various industries.

Can Normality Factor Correct All Types of Titration Errors?

No, the normality factor is specifically designed to correct for the concentration variations of the titrant. It does not address errors caused by procedural mistakes or equipment malfunctions, which must be managed through rigorous training and maintenance protocols.

Conclusion

The exploration of normality factor and titration error highlights the complexities involved in achieving accurate chemical analyses. These elements are critical for ensuring the reliability of results, which in turn impacts numerous sectors dependent on precise chemical measurements. By understanding and applying these concepts correctly, professionals can significantly enhance the quality of their analytical processes.

The importance of accurate chemical titration extends beyond the laboratory. In industries where safety and quality are non-negotiable, mastering these measurement techniques is not just beneficial but essential. Through continuous improvement and adherence to best practices, the accuracy and reliability of chemical analyses can be maximized, benefiting both the scientific community and society at large.